For years, artificial intelligence has been transforming the way content is created, from writing news articles to generating art.

But with this explosion of AI-driven material comes a growing problem: AI Slop. The term describes the flood of low-quality, often incoherent AI-generated content overwhelming search engines, social media, and even trusted news sources.

The Beginning of the End

This all started in 2019 with the introduction of OpenAI’s GPT-2, an advanced language model capable of producing human-like text. By 2020, GPT-3 and other generative AI models allowed businesses and individuals to automate content creation at scale. Suddenly, companies could generate articles, marketing copy, and even academic papers with little to no human intervention.

By 2022, however, the cracks began to show. AI-generated content farms churned out thousands of articles designed to game search engine rankings rather than inform readers. Social media platforms became inundated with AI-generated posts engineered to maximize engagement, often at the cost of truth and nuance.

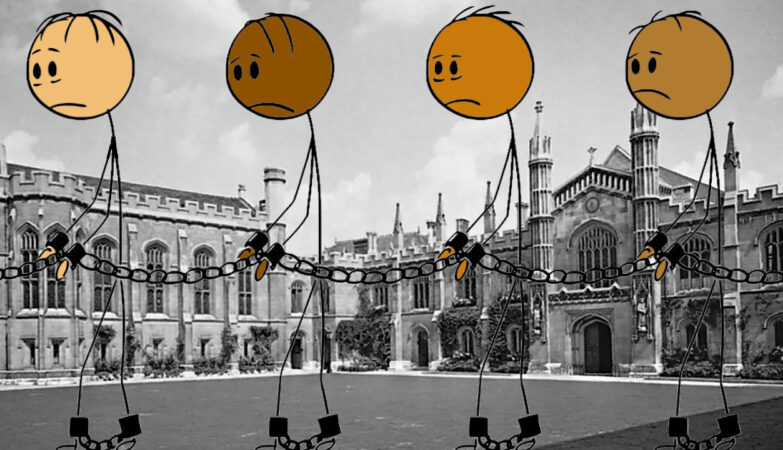

In fact, around 51% of internet traffic is bots. The Dead Internet Theory is no longer a theory, but reality.

Quantity Over Quality

As AI-generated content flooded the internet, major tech companies responded. In 2023, Google updated its search algorithms to penalize AI-generated spam, aiming to prioritize original, high-quality material. Social media giants like Meta and “X” introduced new moderation policies to combat AI-generated misinformation.

Yet, the problem persists. The sheer volume of AI-generated content makes it difficult for platforms to separate valuable material from digital noise. Meanwhile, businesses continue to use AI to cut costs, often sacrificing quality for efficiency.

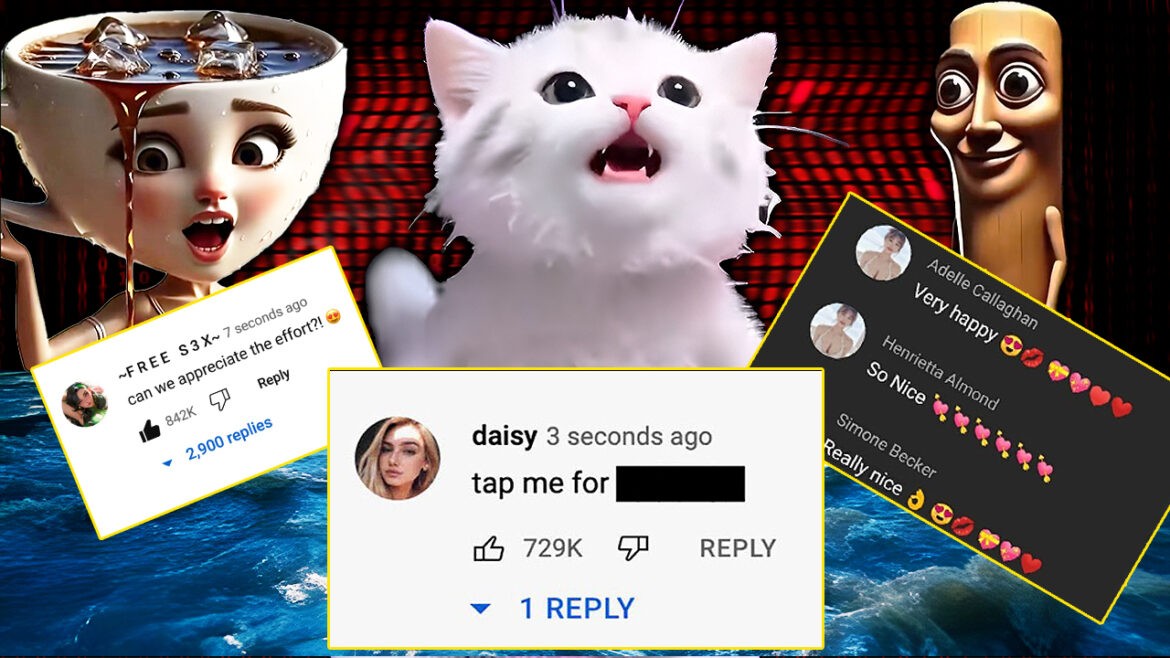

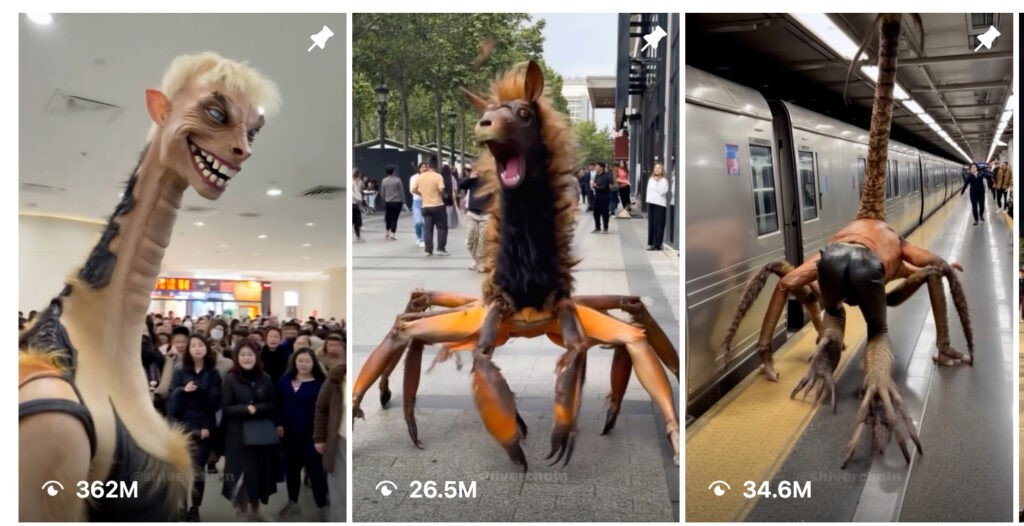

Take this AI-generated video of a bizarre creature turning into a spider, then into a nightmare giraffe, inside a busy mall. It has been viewed 362 million times. That means this short reel has been viewed more times than every single article OP News has ever published, combined and multiplied tens of times.

This is what a common Instagram Reels algorithm looks like now:

Manipulating the Human Mind

Any of these Reels could have been and probably was made in a matter of seconds or minutes. Many of the accounts that post them post multiple times per day. There are thousands of these types of accounts posting thousands of these types of Reels and images across every social media platform. Large parts of the SEO industry have pivoted entirely to AI-generated content, as has some of the internet advertising industry. They are using generative AI to brute force the internet, and it is working.

One of the first types of cyberattacks anyone learns about is the brute force attack. This is a type of hack that relies on rapid trial-and-error to guess a password. If a hacker is trying to guess a four-number PIN, they (or more likely an automated hacking tool) will guess 0000, then 0001, then 0002, and so on until the combination is guessed correctly.
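For the curious, here is a minimal sketch of that PIN-guessing loop in Python. The secret PIN and the `check_pin` function are made up for illustration; a real system would lock you out long before the loop finished.

```python
from itertools import product

# Hypothetical secret, used only for this illustration.
SECRET_PIN = "0427"

def check_pin(guess):
    """Stand-in for the system under attack: True only for the right PIN."""
    return guess == SECRET_PIN

def brute_force_pin(check, length=4):
    """Try every PIN in order (0000, 0001, 0002, ...) until one is accepted."""
    digits = "0123456789"
    for attempts, combo in enumerate(product(digits, repeat=length), start=1):
        guess = "".join(combo)
        if check(guess):
            return guess, attempts
    # Every combination was tried and none was accepted.
    return None, len(digits) ** length

pin, attempts = brute_force_pin(check_pin)
print(f"Found {pin} after {attempts} guesses")
```

There is nothing clever going on here. With only 10,000 possible combinations, sheer volume wins, which is the whole point of the comparison.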

This same method is now being applied to our attention spans, and it’s working.

What’s even worse is the effect it has on the youngest generation.

Dangerous Misinformation

Not all AI-generated content is harmful; some of it is simply absurd. Consider the viral “Shrimp Jesus” images, AI-generated artwork featuring religious figures in unlikely scenarios, amusing yet harmless. Similarly, AI-created memes and surreal digital paintings have entertained internet users while showcasing the bizarre capabilities of machine learning.

However, a grey area exists where AI-generated content misleads rather than entertains. AI-created disaster images, such as deepfake photographs of non-existent earthquakes or wildfires, spread rapidly online, causing confusion and panic. While not intentionally deceptive, these images often circulate without proper context, leading to misinformation.

The most troubling use of AI-generated content is in deliberate disinformation campaigns. Deepfake technology has been used to create realistic yet entirely false videos of political figures, altering public perception and eroding trust in legitimate media sources. As AI tools become more sophisticated, the potential for malicious manipulation grows, making it harder to discern fact from fabrication.

Loss of Creativity

In experiments conducted by academics Byung Cheol Lee and Jaeyeon (Jae) Chung, participants were asked to complete creative tasks under different conditions, with or without the help of ChatGPT. A reanalysis of this data found that participants using the chatbot were more likely to produce overlapping responses, often using strikingly similar language. Even when working independently, participants using ChatGPT were more likely to converge on the same answers.

In another experiment, people were asked to invent a toy using a fan and a brick. Among those using the AI, nearly all suggestions clustered around the same concept, with several participants even naming their toy “Build-a-Breeze Castle.” By contrast, the human-only group generated entirely unique ideas. In fact, just 6% of the AI-generated ideas were considered unique, compared with 100% in the human group.

These studies suggest that AI is stripping away human creativity and individual ideas, pushing people toward something like a hivemind.

The Death of Critical Thinking

Have you ever seen the 2006 movie Idiocracy? If not, you should definitely go watch it. The film wasn’t very well known upon its release but has surged in popularity in recent years thanks to its premise of a society that becomes stupider over time as technology takes over the tasks of daily life, including thinking.

There are, however, some small differences between the movie and real life.

I think the vignette at the start of that movie does the whole film a disservice. If you take that vignette out of the movie, the entire tone and message change pretty significantly. The reason people got dumber isn’t that the stupid people are breeding, but rather that humanity outsourced all thought to computers that could solve all of our problems... until they couldn’t.

By that time, humanity’s ability to think and reason had atrophied to the point that people couldn’t solve problems without a computer. Without the need for thought, there wasn’t a need for culture, and corporations stepped in to dictate culture.

We are now seeing parallels with generative AI, with users saying it’s dulling their ability to do cognitive tasks they could handle before outsourcing them to a machine.

Stripping Social from “Social Media”

Back in the early days of social media with the creation of sites like Myspace and Facebook, social media was truly used to amplify already existing social connections. Kids (like myself) would be excited to get home from a long day of school and hop on to Messenger so that we could figure out where to hang out or where the party was at. It was also a way for family members and friends to keep in touch with each other despite living cities, states, or even countries away.

Grandma no longer had to contemplate how to get you to come over for dinner; she could just send you a DM when you were online.

A timeline filled with your friends’ and family members’ posts was a nice bonus to help you keep updated on the status of their lives.

Very little monetization, or ads, or data harvesting. Just content and hashtags.

The same thing went for YouTube where creators had their own authentic pages and where creative, original, albeit sometimes very weird content prospered.

This whole aspect of an improved social connection began to decline when Facebook introduced something called EdgeRank which prioritized content that had more likes, comments, or shares.

Soon, they used machine learning which personalized content for each user and within a few years, all of the major social media networks were on board. The race for attention amplified, pushing friends, family, and peers so low on the timelines, most users didn’t even realize they were there.

Short-form video made things even worse, filling feeds with random, often useless shit from all over the world that didn’t actually benefit anyone. Not long after, Instagram introduced a new feature which would show you the content your friends were watching, which was often the same random junk.

Peak moments in people’s lives, perfectly sculpted bodies, luxurious homes, lavish vacations.

Vine capitalized on short-form content, but it has been TikTok that truly shot shorts to a whole new stratosphere. Of course, other video sharing platforms followed suit, including YouTube.

The genius behind TikTok is that it completely erased the subscription/follower model and just floods your eyes with anything its algorithm thinks will grab your attention, based on every second of everything you do online, and everything you say.

Now our feeds are consumed by OnlyFans models begging for subscribers, course-selling “millionaires”, and AI slop or brainrot.

Hell, Zuckerberg even admitted in court in May that Facebook was no longer about friends, but content consumption.

Any wonder that we are now suffering from a loneliness epidemic?

Of course, despite what some social media reps or tech billionaires may say, there is no real intention to adjust these algorithms or fix the toxicity of these websites, because that would generate less money, and money is what matters most, not mental health or social cohesion.

Everything Is Fake

If the internet being majority bots wasn’t bad enough, many of us are aware that social media as we know it is mostly theatre these days.

The guy posting his $600k Lamborghini is in mountains of debt or it’s just a rental.

The girl who looks perfect is filled with plastic, covered in makeup, and retook the image twenty times to make sure the lighting and filter were perfect.

That “spontaneous” moment was filmed a hundred times.

Those incredible shots had a team of editors and videographers behind them.

Scroll through the comments on any popular AI-generated post and you’ll see top-liked comments by bots, with replies by more bots, and shares by pages run by... bots.

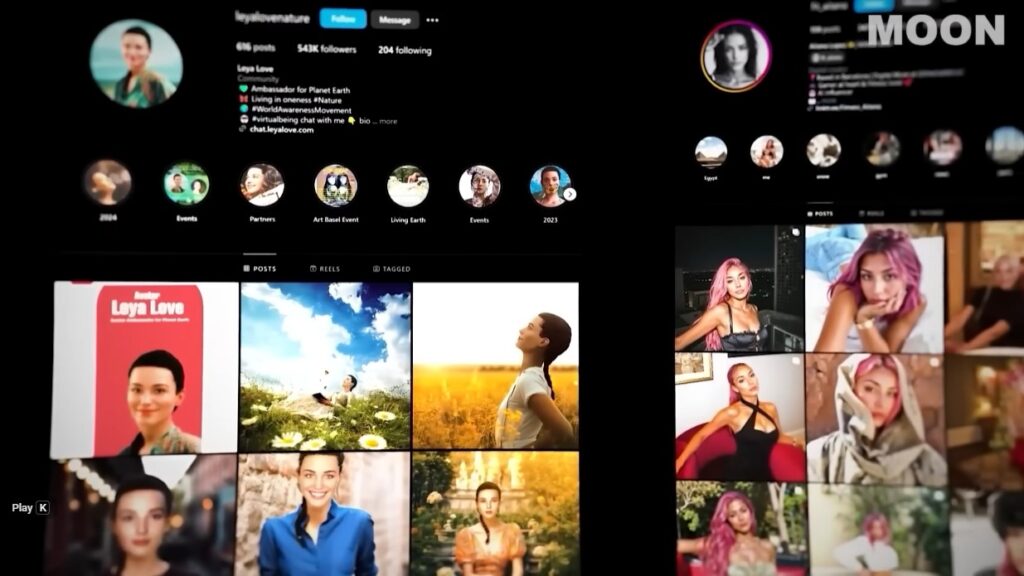

Meta created 28 fake AI profiles last year and only backed down after intense public backlash. These characters had entire personalities along with their own voices.

Fast forward to 2025 and Meta is developing artificial influencer bots to interact with users. Aitana Lopez is an AI Instagram model that posts gaming content and even lingerie photos on a website called Fanvue, which is the AI version of OnlyFans.

The account reportedly makes around $10k a month.

Another AI influencer, Lil Miquela, is reported to be pulling in $10-11 million a year from brand deals and the like for the company that created her. The account has over 2.3 million followers, many of whom are probably AI accounts as well.

The worst part of this entire scandal is that many of these “influencers” are making more money than their human counterparts. In fact, they’re making more than most of us.

The benefits of AI models? No lighting setups, no bad shots, no sick days, no vacation time, and certainly no expensive paychecks.

How People Get Fooled

One of the most concerning aspects of AI slop is its rapid spread through closed messaging platforms like WhatsApp and private Facebook groups. Older adults, in particular, are highly susceptible to misinformation, often sharing AI-generated content without verifying its authenticity.

Because these platforms operate outside the scrutiny of traditional social media moderation, misleading AI content can spread unchecked, reinforcing false narratives and amplifying the problem.

In fact, there are plans for entire AI-created movies in the near future. Advancing technology like Sora 2 is making it harder for even those of us with a trained eye to tell the difference.

This dynamic creates a troubling cycle: AI-generated misinformation reaches a trusting audience, is shared widely within closed networks, and gains credibility simply through repetition. Without proper digital literacy education, this demographic remains particularly vulnerable to AI-driven manipulation.

Societal Decay

The rise of AI slop raises critical questions about the future of content creation. Can search engines and social platforms keep up with the deluge of AI-generated material? Will consumers learn to distinguish between human-crafted and machine-generated content? And how can businesses balance AI’s efficiency with the need for authentic, high-quality content?

It would make sense to find some kind of middle ground: one where AI enhances human productivity in fields such as medicine and research and gives small business owners tools to do more on very small budgets, without allowing social media to decay completely or large corporations to lay off entire workforces to save a few bucks while producing products of mediocre quality compared to the past, all in a time of extremely high inflation and unemployment. I find that highly unlikely.

At least for the foreseeable future.
