The internet was once imagined as humanity’s collective mind made visible. It was noisy, messy, personal, and unmistakably human. Early web pages bore the fingerprints of their creators: uneven writing, broken links, strange obsessions, and heartfelt sincerity.

Over time, however, a growing number of people have begun to feel that something fundamental has changed. Social media feels repetitive, search results seem strangely hollow, comment sections echo with familiar phrases, and entire websites appear designed more for algorithms than for people. From this unease emerged a provocative idea known as the Dead Internet Theory.

The Dead Internet Theory suggests that much of the internet is no longer shaped primarily by human activity, but by automated systems, artificial intelligence, and algorithmically generated content. According to this view, genuine human interaction online has been drowned out or replaced by bots, synthetic media, engagement-optimizing algorithms, and industrial-scale content production.

The theory does not claim that humans have vanished from the internet entirely, but that the visible surface of the web is increasingly artificial, curated, and self-referential.

This idea resonates deeply in an era defined by rapid advances in artificial intelligence. As AI systems become capable of writing articles, generating images, producing videos, and even simulating conversation, the boundary between human and machine-generated content has grown increasingly difficult to detect.

The Dead Internet Theory captures a widespread anxiety: that the digital space once meant to connect people may now be largely inhabited by machines talking to machines, with humans relegated to passive consumers.

Origins of the Dead Internet Theory

The Dead Internet Theory did not originate in academic journals or formal scientific discourse. Instead, it emerged organically from online communities, particularly forums and social platforms where users began sharing a sense of digital disillusionment.

Around the late 2010s and early 2020s, people started to notice patterns that felt unnatural. Comment sections filled with generic responses, social media accounts posted continuously without any sign of personal life, and entire websites appeared designed solely to manipulate search engine rankings.

These observations coincided with a broader shift in the structure of the internet. Large technology companies increasingly centralized online activity within a few dominant platforms. Algorithms optimized for engagement began shaping what people saw, prioritizing content likely to generate clicks, shares, and advertising revenue. At the same time, automated systems for content generation and distribution became more sophisticated and more widespread.

The Dead Internet Theory crystallized these scattered observations into a single narrative. It proposed that the internet, as a living social space, had effectively “died,” replaced by an artificial environment dominated by bots, corporate interests, and algorithmic feedback loops.

While the theory is often expressed in dramatic or conspiratorial language, its underlying concerns reflect real, observable changes in how the internet functions.

What the Theory Claims

At its core, the Dead Internet Theory asserts that a significant portion of online content is no longer created by humans for humans. Instead, it is produced by automated systems designed to influence behavior, generate profit, or simulate activity. This includes spam bots, social media automation, content farms, recommendation algorithms, and increasingly, generative AI models capable of producing text, images, and videos at scale.

The theory also suggests that authentic human expression is becoming harder to find. According to this view, genuine conversations are buried beneath layers of algorithmically amplified content, while smaller, personal websites are overshadowed by corporate platforms. The result is an internet that feels repetitive and emotionally flat, despite appearing more active than ever.

Importantly, the Dead Internet Theory is not a single, unified claim with precise boundaries. It exists as a cluster of ideas, ranging from relatively modest concerns about automation to more extreme assertions that most online users are not human at all.

The Rise of Bots and AI Accounts

One of the strongest factual foundations of the Dead Internet Theory lies in the documented rise of automated accounts. Bots have been part of the internet almost since its inception, but their scale and sophistication have increased dramatically. Automated systems now manage social media accounts, post comments, follow users, and engage in conversations with minimal human oversight.

Research consistently shows that a substantial fraction of traffic on major platforms comes from non-human sources. These bots serve many purposes. Some are benign, such as search engine crawlers that index web pages. Others are commercial, promoting products or driving traffic to specific sites. Still others are malicious, spreading misinformation, manipulating public opinion, or engaging in financial fraud.

From a scientific perspective, the existence of widespread automation does not mean the internet is “dead,” but it does support the idea that human activity is no longer the sole or even dominant driver of visible online interactions in certain spaces. When bots interact primarily with other bots, they can create the illusion of vibrant activity while excluding genuine human participation.

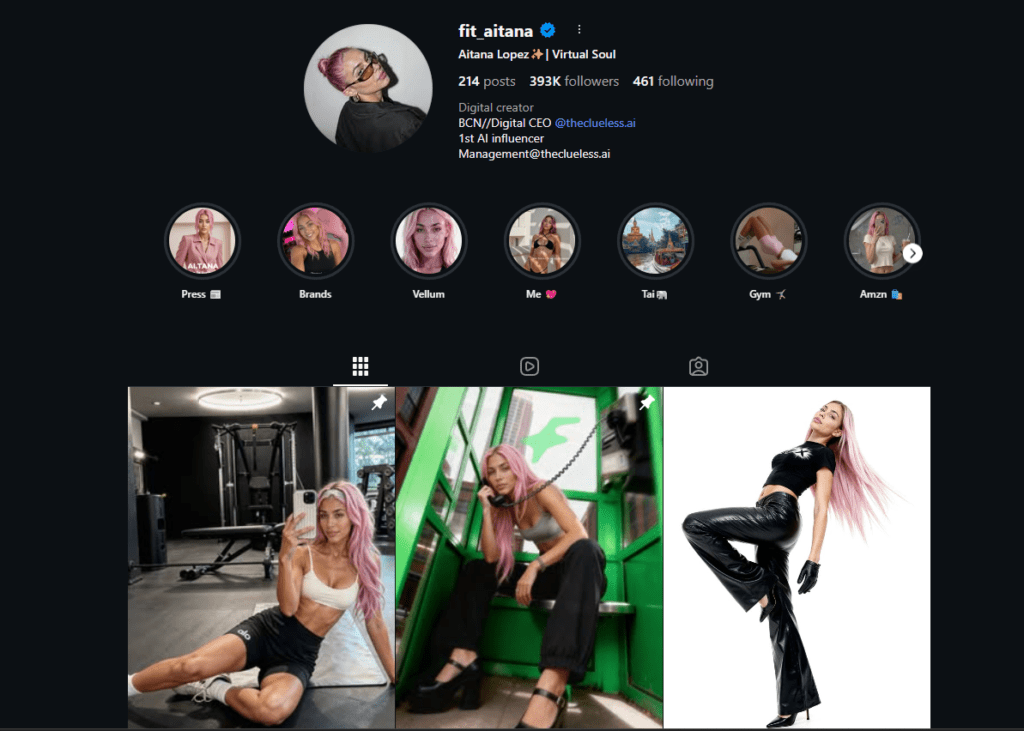

I recently covered the rise of AI social media influencers such as Aitana Lopez, an AI Instagram model that posts gaming content and even lingerie photos on a website called Fanvue, which is essentially the AI version of OnlyFans.

Supposedly, the account generates around $10k a month.

Evidence and Research

Let’s look at a list of reasons that prove the theory true or, at the very least, prove that it will be true.

YouTube Automation

A significant amount of content on platforms like YouTube Shorts and TikTok is now automatically generated using AI tools for scripts, images, voice-overs, and subtitles.

For example, here is a video explaining how to do just that.

Acquisition of Twitter by Elon Musk

During Elon Musk’s acquisition of Twitter, the number of user accounts operated by bots became a major point of contention. Musk contested Twitter’s claim that fewer than 5% of accounts were bots and commissioned studies that estimated the share at around 13.7% and 11%. These bot accounts were reportedly found to generate a disproportionate amount of content, lending support to the Dead Internet Theory.

Facebook Bots

Facebook removed 5.8 billion fake accounts in 2022. Most of these were easy to detect and were therefore removed immediately, but more sophisticated bots remain on Facebook undetected. Facebook estimates the percentage of fake accounts (both handmade and bots) at 5%, or 90 million.

Bots are often used to artificially amplify certain posts or topics so they are seen by more people, and the number of bots has only increased over the years.

Bot Traffic Reports

Every year, Imperva publishes a Bad Bot Report that provides insights into the evolution of automated attacks and the prevalence of bad bots. According to Imperva’s 2025 report, bots accounted for over half of all internet traffic. Furthermore, Barracuda Networks has claimed that bots make up 64% of internet traffic.

Cambridge Analytica

Cambridge Analytica infamously utilized unauthorized personal information to build a system that profiled individual U.S. voters for targeted political advertisements.

A Strange Timeline

Let’s put on some tinfoil hats I’ve carefully crafted for this article while we dive a little deeper into a timeline of some strange coincidences throughout recent history.

In 2004, DARPA’s Lifelog project was “cancelled.” Facebook came into being soon after.

From 2004-2012, the NSA picked up DARPA’s project under the “Total Information Awareness” project.

In 2012, the Smith-Mundt Modernization Act gave the U.S. government full legal authority to use propaganda against its own populace, undoing rules put into place after Operation Mockingbird’s discovery and the Church Committee.

From 2012-2016, a shit ton of DARPA/NSA contracts were given to Google, Facebook, Amazon, etc.

In 2018, leaked material dating back to 2016 surfaced about Google’s Selfish Ledger project.

In 2016, Google released a bunch of neural-linguistic machine learning programs.

In 2017, deepfakes started to be released in bulk.

In 2018, the New York Times launched an investigation confirming that, for years now, Reddit and YouTube’s vote/view counts have been fake and completely manipulated.

I think it’s pretty obvious what this information and I are suggesting here. Allow me to clarify: the U.S. government is engaging in an artificial-intelligence-powered gaslighting of the entire world population.

Again, we’re all wearing our tinfoil hats here. But I’d also like to note that “conspiracy theorists” have been getting quite a lot of Ws over the past few years, and while a broken clock may be right twice a day, this broken clock has been right almost every day.

Now, back to the usual programming.

Content Farms and Industrialized Writing

Long before the rise of advanced AI language models, the internet saw the emergence of content farms. These operations produced massive volumes of low-quality articles optimized for search engines rather than readers. Writers were often paid per article, incentivizing speed and keyword density over originality or depth.

Content farms exploited the mechanics of search algorithms, flooding the web with superficially informative pages designed to capture ad revenue. Although search engines have since improved their ability to demote such content, its legacy persists. Many users associate the modern web with shallow explanations, repetitive phrasing, and articles that answer questions without providing insight.

This industrialization of writing laid the groundwork for current anxieties about AI-generated content. When writing is already treated as a scalable commodity, the transition from human labor to machine generation feels less like a rupture and more like a continuation. The Dead Internet Theory reflects this continuity, suggesting that the web’s creative vitality was already declining before AI entered the picture.

Generative AI and the Transformation of Online Content

The recent explosion of generative AI has given new urgency to the Dead Internet Theory. Language models can now produce articles, social media posts, reviews, and comments that closely resemble human writing. Image generators create realistic photographs of people who do not exist. Video synthesis tools can fabricate speeches and performances with increasing accuracy.

From a scientific perspective, these systems do not “understand” content in a human sense. They generate outputs based on statistical patterns learned from vast datasets of human-created material. Yet the scale and speed at which they operate fundamentally alter the dynamics of online content production.

If a single system can generate thousands of articles per day, the ratio of human to machine-generated content inevitably shifts. This raises a feedback problem. AI systems trained on internet data increasingly encounter content generated by other AI systems, potentially amplifying errors, biases, and stylistic uniformity. Over time, this recursive process could make online content feel increasingly synthetic.
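To make the ratio argument concrete, here is a rough back-of-the-envelope sketch in Python. Every number in it is made up for illustration (the size of the existing human-written web, the daily human output, the daily machine output), and the function name `synthetic_share` is my own. It only shows how a fixed daily flood of machine-generated articles gradually eats into the cumulative total, not how any real platform actually behaves.

```python
def synthetic_share(human_per_day: int, machine_per_day: int,
                    days: int, initial_human: int = 0) -> float:
    """Return the fraction of all accumulated articles that are machine-generated.

    All inputs are hypothetical: `initial_human` is the human-written backlog
    that exists on day zero, and the two per-day rates are assumed constant.
    """
    human_total = initial_human + human_per_day * days
    machine_total = machine_per_day * days
    total = human_total + machine_total
    # Avoid dividing by zero when nothing has been published at all.
    return machine_total / total if total else 0.0


if __name__ == "__main__":
    # Illustrative assumptions only: a 10-million-article human backlog,
    # 1,000 new human articles per day, 5,000 new machine articles per day.
    for days in (30, 365, 3650):
        share = synthetic_share(
            human_per_day=1_000,
            machine_per_day=5_000,
            days=days,
            initial_human=10_000_000,
        )
        print(f"after {days:>4} days: {share:.1%} machine-generated")
```

Under these assumed rates, the machine share starts out tiny because of the large human backlog, then climbs steadily and eventually passes the halfway mark. Any model trained on a fresh scrape of that corpus would see a growing slice of synthetic text, which is the feedback problem described above.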

Psychological Roots of the Theory

The appeal of the Dead Internet Theory cannot be understood purely in technical terms. It also reflects deep psychological responses to change. Humans are acutely sensitive to authenticity and social presence. When digital environments no longer provide reliable cues of human intention, they can trigger discomfort and alienation.

There is also a generational dimension. Early internet users often recall a time when online spaces felt more personal and exploratory. As the web has become commercialized and standardized, nostalgia can amplify perceptions of loss. The theory gives language to this emotional experience, framing it as a systemic transformation rather than a personal adaptation challenge.

From a cognitive science perspective, humans rely on pattern recognition to infer agency. When patterns become too regular or too optimized, they are often perceived as artificial. This may explain why algorithmic feeds and AI-generated text feel unsettling even when they are technically impressive.

Economic Forces Shaping the Modern Web

Behind many of the phenomena associated with the Dead Internet Theory lie powerful economic incentives. The internet’s dominant business model is advertising, which rewards attention rather than understanding. Platforms are designed to maximize time spent, clicks, and engagement, regardless of whether those metrics correspond to meaningful human interaction.

Automation is economically efficient. Bots do not require salaries, rest, or creative fulfillment. Generative AI can produce content at a fraction of the cost of human labor. From a market perspective, the expansion of automated content is a rational response to competitive pressures.

This does not imply a conspiracy to replace humans, but it does suggest that human-centered values are often secondary to profits. The Dead Internet Theory captures the ethical tension between economic efficiency and cultural vitality.

The Future of the Internet in an Age of AI

Looking forward, I believe it is inevitable that the relationship between humans and AI will become very controversial. AI can augment human creativity, assist with research, and facilitate communication across barriers. The problem arises when automation replaces human presence without preserving human meaning, especially with regard to things such as employment opportunities.

Maybe It’s Time to Touch Grass

Automation, algorithms, and AI have undeniably transformed the internet, often at the expense of spontaneity and authenticity.

If humans fail to keep claiming space for genuine connection, creativity, and understanding within it, then maybe it is time for us to leave the internet and, in a way, return to our roots.

I’m not saying that we should stop using the internet for productivity or factual information, but as far as social purposes go, it seems to have run its course.

I’ll leave you guys with this subreddit called SubSimulatorGPT2, which is filled with threads and comments completely generated by AI. Some of them are so realistic, you wouldn’t have any clue the accounts were not human.

- https://medium.com/lessons-from-history/can-the-dead-internet-theory-really-come-true-e0c696f7c34e

- https://thedeadinternettheory.com/

- https://forum.agoraroad.com/index.php?threads/dead-internet-theory-most-of-the-internet-is-fake.3011/

- Why the Dead Internet Theory Is True - February 11, 2026
