Last month, YouTube was in the news yet again after famous content creator Bryant Moreland, known as EDP445 online, was caught trying to meet a 13-year-old in an online vigilante sting operation. Another YouTuber known for filming these “stings” released the footage, which shows Moreland exchanging inappropriate texts with a decoy posing as a 13-year-old.

Back in 2019, Matt Watson hit the front page of Reddit with a video explaining a phenomenon he had discovered on the video-sharing platform. Watson found that within a few clicks of normal, appropriate, and monetized (ad-sponsored) videos, one could enter a wormhole of inappropriate, eroticized clips of girls as young as nine years old. In some instances, these videos were monetized, were timestamped to flag suggestive moments, or had private, unlisted links that led invited users to other pornography, sometimes of children. Reaction was swift. Advertisers such as AT&T and Epic Games quickly froze business on YouTube, which is owned by Google. The company promised to step up content and comment moderation by both human moderators and artificial intelligence. But the same story keeps getting told: In 2017, YouTube dealt with the same problem, announced a slew of new moderation policies, and declared the problem handled. It wasn’t.

But the sexual exploitation problem, particularly regarding children, is not limited to YouTube. The National Center on Sexual Exploitation (NCOSE) puts together a yearly list, dubbed the Dirty Dozen, of mainstream organizations that facilitate this exploitation. On this year’s list? Twitter, Amazon, United Airlines, the gaming platform Steam, and more. To find out more, Fatherly talked to Haley Halverson, NCOSE’s vice president, who works on the Dirty Dozen list every year. Halverson spoke about how pervasive the sexual exploitation of kids is on the internet, how even “safe” platforms are problematic, what can be done, and why certain companies only act to remove these videos when they’re called out.

Tell me about the Dirty Dozen list. How long has NCOSE been putting it together?

The Dirty Dozen list started in 2013. Since then, it’s been an annual list. It’s 12 different targets. One of the requirements is that the companies listed are typically mainstream players in America (whether they’re a corporation or, occasionally, an organization) that are facilitating sexual exploitation. We try to have a variety of industries represented, and we also try to have a variety of issues of sexual exploitation represented on the list. At NCOSE, one of our primary messages is that all forms of sexual exploitation are inherently connected to one another. We can’t solve child sexual abuse without talking about sexual violence and prostitution; all of these things feed into one another. We need to have a holistic solution. That’s why we try to have the Dirty Dozen list cover a wide variety of issues.

Looking at the list this year, probably 50 percent are media companies or websites: Steam, Twitter, Roku, Netflix, HBO. Why did these websites and platforms make the list?

We’ve got many kinds of companies: social media platforms are on there, and media and entertainment companies are on there. This is tough because I work on them all, and they’re all very different.

As far as HBO and Netflix, those are maybe the two most similar ones on our list. With them, we’re seeing a real lack of awareness and social responsibility in how the media portrays sexual exploitation issues: for example, gratuitous scenes of sexual violence. HBO’s Game of Thrones has shown several rape scenes. There have been rape scenes on Netflix’s 13 Reasons Why. In addition, both of these companies have either normalized or minimized issues like sex trafficking. Netflix has come under fire because it has now had at least two instances where it portrayed either underage nudity or child eroticization. The media has a heavy role to play in our culture, and when it portrays these issues irresponsibly, it not only decreases empathy for victims but sometimes eroticizes sexual violence or sends incorrect messages about the harms of commercial sexual exploitation.

What have you found on sites like Twitter, Steam, and Google?

On Twitter, for example, we know that not only is there a plethora of pornography, but people are, in reality, using Twitter to facilitate sex trafficking and prostitution, not only through direct messaging but also by finding each other through hashtags and sharing photographs and videos. The fact that Twitter is being used to facilitate sex trafficking and pornography is incredibly problematic.

On YouTube, everything came out this week about the #WakeUpYouTube scandal and how there are hordes of videos of young girls being eroticized by what are essentially pedophiles or child abusers in the comment sections, who trade contact information with each other to network and try to get contact information from the girls so they can follow up with them and groom them on social media, presumably for further sexual abuse. That is happening on Twitter as well. We’re seeing that these large internet platforms just aren’t taking enough responsibility to address these very serious problems.

What do you think needs to happen?

YouTube right now is mostly relying on users to actually flag harmful content, and then YouTube has human eyes reviewing the hours and hours of video uploaded every minute. The same is true with Twitter. Millions of people are tweeting constantly. For them to have these really outdated systems is irresponsible. So we think they need to take a more proactive approach in how their algorithms work, using better AI. They need to actually make dealing with sexual exploitation a priority, because right now it’s not. Right now they’re happy that people are commenting and that videos have high views, and they are looking at the corporate profit bottom line instead of the health and safety of their users. Right now, they’re okay with those types of messages proliferating as long as they don’t rise to the level of literal child pornography, but that’s such a low bar. People are being groomed for abuse. They are being bought and sold on these platforms with or without actual child pornography being shared. That should not be the standard.

So, engagement is the bottom line for media companies. Whatever that engagement might mean probably isn’t relevant to YouTube unless they get called out on it. But this isn’t a new problem, right? This has been around since 2017.

That’s really the trend with YouTube. And it’s the trend with Twitter, and pretty much all of these companies. They’ll get slammed in the media, maybe they’ll do a little bit of cleanup for a short period of time around those specific videos they’re being called out about and then they just wait for us to forget and they go on with business as usual.

I saw EBSCO was on the Dirty Dozen list. EBSCO, in part, serves kids in K-12 schools. Is this research platform being called out for having comprehensive sex education? Is it sending school-aged kids to data on that? Or is it sending them to legit porn?

NCOSE does not get involved in sex education debates. We don’t have a dog in that fight. EBSCO is in thousands of schools, and there is actually pornography on the platform, including live links to pornography.

How does that even happen?

Yeah. It’s crazy. They have a lot of PDFs, and in the PDFs, they’ll have links to hardcore pornography. In early October 2018, we went through EBSCO and found live links to BDSM pornography sites and live links to very violent pornography sites. The problem is that EBSCO is not recognizing that their ultimate users are children. It’s children that use their platforms. And this is the important thing: the whole point of having an educational database in our schools is that it provides safe, age-appropriate, educational information. So if EBSCO can’t clean up its database in order to be that, then we might as well just put all these kids on Google. What’s the point of having an educational database unless it’s actually providing what they say it does?

That’s nuts. I would imagine it should be a closed, safe information system. When I went to college, we had JSTOR. That is only filled with verified academic materials. I would imagine EBSCO would be even more strict, especially when used for K-12 kids.

Yeah, they have a list of publishers that they work with, and they take their content. Those publishers have included, at least, media outlets like Cosmopolitan Magazine and Men’s Health, which regularly have articles about pornography, normalizing prostitution, and encouraging people to engage in sexting. That’s not educational information, particularly not the kind a kid in elementary school needs to be accessing. The problem is that, at least from what I’ve seen, they appear to just put up everything publishers send in.

Granted, they’ve made a lot of progress. This was EBSCO’s third year on the Dirty Dozen list, and when we first started working with them, I could type in the word “respiration” or “7th grade biology” and from that search find hardcore pornography. That has now been largely fixed. Their elementary school database is particularly improved, but their high school database still has a ways to go.

With Twitter and Netflix, it makes sense to me that you can log on and quickly see what’s happening. How did you discover this about EBSCO?

We started hearing about EBSCO from parents around the country, because children were stumbling across this content. And really, parents are horrified when they realize this is a problem, and they should be. If EBSCO isn’t going to clean up its services, then it should at least warn parents that their kids are probably going to be exposed to graphic content.

What about Steam? That’s an online video game platform that a ton of kids 18 and under use. What goes on on that platform that is problematic?

Steam was on the Dirty Dozen list in 2018. They first came to our attention because there was a rape-themed game called “House Party,” where the entire theme of the game is to walk into a house and have sex with all the women who are there and you do this through getting them drunk, sometimes lying to them, sometimes blackmailing them. There are different categories. Not only is that bad in and of itself, but it was animated pornography that included penetrative sex acts.

That’s what got Steam onto the list. As we looked, we saw they had a large, and increasing, number of games similar to that. There was some progress; they started to remove some of these games. But then, in June of 2018, they flipped and made a new policy to allow everything onto the Steam store except for things that were illegal. When that happened, the number of games tagged for nudity or sexual content doubled from 700 to 1,400 in just over four months. Now there are over 2,000 games with this tag. So not only is Steam facilitating the growing trend of pornified video games, which often gamify sexual violence and coercion, but its filtering and parental controls are basically nonexistent: even with the filters on, you’re just a few clicks away from pornographic or sexually graphic games.

What can YouTube do? What can any of these platforms really do?

For each target, we wrote them a letter explaining the reason they’re on the Dirty Dozen list and asking them for specific improvements. For YouTube, one of the biggest things is that we just want them to be more proactive in how they police their site. They need to use AI and update their algorithms. They already have algorithms that can detect when a number of predatory comments are being made on a video.

A couple of years ago, there was a YouTube announcement where they said one of the ways they were getting tougher to protect families on YouTube was by disabling comments on videos where those comments were becoming increasingly predatory. We did a deep dive into the YouTube scandal in 2019 and pulled together proof of what was going on. The fact that a lot of those videos have the comments disabled means that the YouTube algorithm recognized that there were a lot of predatory comments happening.

But the only action they took was to disable the comments. They still left the videos up: videos of very young girls, 9 or 11 years old, being eroticized by men. They got hundreds of thousands of views. I saw multiple videos with two million views. That’s putting that child at risk, even if you are disabling the comments. They need to take a more proactive approach to keep people on their platform safe instead of just prioritizing engagement.

Some of the kids in these videos are clearly under 13. Even when I was a kid, I lied about being 13 to get on the Internet.

Yeah, kids just lie. These social media companies need to be more conscious about their platforms, and not less conscious about their platforms.

Twitter Exposed

In late 2019, it was reported that Twitter had suspended journalist Andy Ngo for tweeting inconvenient facts about the purported “epidemic” of transgender deaths, and had barred undercover journalism organization Project Veritas from running recruitment ads. But if you’re a pedophile who wants to discuss your attraction to minors, Twitter’s just fine with that.

Big League Politics discovered a little-noticed, quietly enacted Twitter rule change from March, which says “Discussions related to child sexual exploitation as a phenomenon or attraction towards minors are permitted, provided they don’t promote or glorify child sexual exploitation in any way.”

So, if you post politically inconvenient yet accurate statistical facts, as Ngo did, you get suspended. But if you discuss “attraction towards minors,” Twitter explicitly allows it.

It goes without saying that Twitter’s policy did not arise out of some blanket commitment to free speech. Twitter CEO Jack Dorsey has specifically rejected such commitments, rubbishing the idea that Twitter is the “free speech wing of the free speech party,” as one VP at the company described it in 2012.

In an interview with Wired last year, Dorsey said that the “free speech wing” comment was a “joke” that was taken too seriously.

“This quote around ‘free speech wing of the free speech party’ was never, was never a mission of the company. It was never a descriptor of the company that we gave ourselves,” said Dorsey.

The Twitter CEO continued, saying that a commitment to free speech “comes with the realization that freedom of expression may adversely impact other people’s fundamental human rights, such as privacy, such as physical security.”

In other words, Twitter’s decision to allow pedophiles on its platform is something they’ve chosen to do, not something they have to do.

In the worldview of Twitter, a journalist posting statistics that contradict the intersectional left is somehow a threat to human rights, privacy, or physical security. But allowing pedophiles to openly organize isn’t.

Pedophiles to Discuss ‘Attraction Towards Minors’ on Their Platform

Social media giant Twitter quietly amended their terms of service to allow “discussions related to… attraction towards minors” on their platform.

“Discussions related to child sexual exploitation as a phenomenon or attraction towards minors are permitted, provided they don’t promote or glorify child sexual exploitation in any way,” reads Twitter’s terms of service.

Twitter also noted that they would allow nude depictions of children on their platform in certain instances.

“Artistic depictions of nude minors in a non-sexualized context or setting may be permitted in a limited number of scenarios e.g., works by internationally renowned artists that feature minors,” they added.

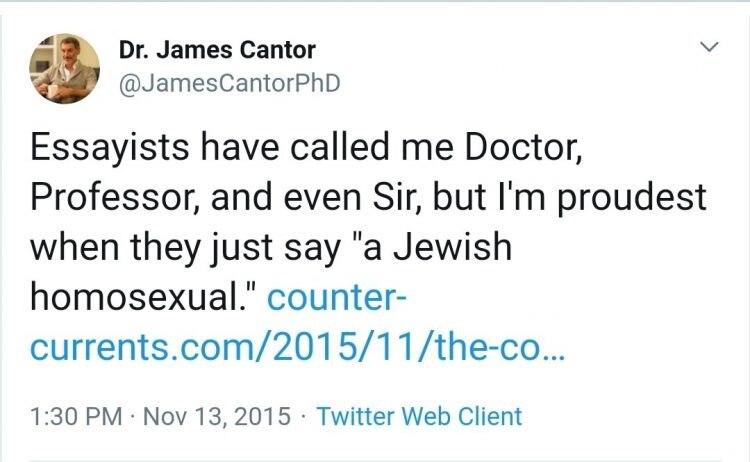

Twitter’s pro-pedo policy may have been implemented at the behest of Dr. James Cantor, who describes himself as a proud Jewish homosexual. Cantor is a leading researcher and advocate for pedophiles, who he refers to as minor-attracted persons.

In January 2018, Cantor and other university academics wrote a letter to John Starr, Twitter’s Director of Trust and Safety. The letter urged Twitter to allow pedophiles to network and discuss their attraction to children on the monolithic social media platform.

“Many of us have worked with a group of such non-offending pedophiles, also known as anti-contact MAPs (Minor-Attracted Persons), in a peer support network called Virtuous Pedophiles (@virpeds), as well as in other support networks,” they wrote in their letter.

“Recently, a prominent member of Virtuous Pedophiles, who goes by the pseudonym Ender Wiggin (@enderphile2), had his account permanently suspended by Twitter. At least one other member of the same network, Šimon Falko (@simgiran), also had his account permanently suspended around the same time, and a number of other accounts of non-offending, anti-contact MAPs have since been permanently or temporarily suspended,” they added.

They argued that denying pedophiles a place on the social media platform may lead to these so-called virtuous pedophiles acting on their impulses to sexually victimize kids.

“In our professional opinions, terminating the accounts of non-offending, anti-contact MAPs is likely to result in the opposite effect of that which Twitter may expect or intend. Rather than reducing the incidence of child sexual abuse, if anything, it increases the risk that some pedophiles will be unable to obtain the peer or professional support that they may need in order to avoid offending behavior. It is also likely to increase the stigma and isolation associated with pedophilia and thereby increase the likelihood of some MAPs acting on their sexual feelings,” they wrote.

While conservatives, libertarians and Trump supporters regularly get booted from Twitter for constitutionally-protected speech, Twitter actively harbors pedophiles and their enablers. Big Brother is not looking out for the sanctity of the children.

Facebook Exposed

Over the years, Facebook has come under fire for not doing enough to police secret groups that trade child porn on the network. And in a disturbing twist, Facebook seems to be making the problem worse. Back in 2017, when BBC journalists discovered child porn on the network and sent those images to Facebook, the company reported the BBC to police in the UK for distributing illegal images.

The BBC had been investigating secret child porn rings on Facebook for years. Then a Facebook representative, Simon Milner, finally agreed to sit down for an interview about moderation tools on the network. There was just one condition: Facebook asked the BBC reporters to send the company the images they had found in Facebook’s secret groups and wanted to discuss.

The BBC journalists sent Facebook the images they had flagged from private Facebook groups. And not only did Facebook cancel the interview, the company reported the journalists to the police.

“It is against the law for anyone to distribute images of child exploitation. When the BBC sent us such images we followed our industry’s standard practice and reported them to CEOP [Child Exploitation & Online Protection Centre],” Facebook told Gizmodo in a statement. “We also reported the child exploitation images that had been shared on our own platform. This matter is now in the hands of the authorities.”

Gizmodo spoke with Facebook’s press team in the UK but they would not discuss the specifics of the case on the record beyond the prepared statement they released. Simon Milner, current policy director at Facebook, did not respond to Gizmodo’s request for comment this morning through Facebook. Milner used to work for the BBC, according to his public Facebook page.

Using Facebook’s own moderation tools, the BBC’s recent investigation attempted to report 100 images that appeared to violate Facebook’s terms of service for sexualized images of children. The BBC found that just 18 of the 100 were eventually taken down on Facebook.

Furthermore, Facebook’s rules forbid convicted pedophiles in the UK from having accounts. After positively identifying five pedophiles on the site and notifying Facebook, the BBC reports that no action was taken.

“The fact that Facebook sent images that had been sent to them, that appear on their site, for their response about how Facebook deals with inappropriate images… the fact that they sent those on to the police seemed to me to be extraordinary,” BBC’s director of editorial policy, David Jordan, told his own news outlet.

Gizmodo asked the UK’s National Crime Agency (NCA) about the case of Facebook reporting BBC journalists for doing their jobs and was sent boilerplate language about how important it is for anyone who may accidentally stumble upon child porn on social media platforms to report that to the police.

“It is vital that social media platforms have robust procedures in place to guard against indecent content, and that they report and remove any indecent content if identified. Social media platforms should also provide easy to use and accessible reporting mechanisms for their users,” the NCA told Gizmodo in a statement.

But the agency wouldn’t comment on this specific case and whether or not the BBC journalists are now being investigated for the distribution of child pornography.

“The NCA does not routinely confirm or deny the existence of specific investigations, nor receipt of specific reports,” the NCA said.

“We have carefully reviewed the content referred to us and have now removed all items that were illegal or against our standards,” Facebook told Gizmodo in a statement. “This content is no longer on our platform. We take this matter extremely seriously and we continue to improve our reporting and take-down measures.”

“Facebook has been recognized as one of the best platforms on the internet for child safety,” Facebook’s statement continues.

It could not be determined by press time whether Facebook is indeed “one of the best platforms on the internet for child safety.” What could be confirmed, however, was that Facebook reported journalists to the police who were making a good faith effort to expose illegal images on their own platform.

Preliminary report on child pornography being distributed via Facebook chats and groups

A violent and shocking report has been circulating on the internet. It is written by a collective named Army of Angels: a group of vigilantes who infiltrate Facebook’s darkest corners to find and destroy indecent material involving minors. It is with great sadness and astonishment that the Foundation for Dis-Infestation presents a censored version of this report, which exposes what is actually welcomed on the platforms we use daily, platforms that children can easily, and often unwittingly, access.

The following document is of an EXTREMELY GRAPHIC NATURE, even censored. Please, discretion is advised, and the most sensitive readers should definitely stay away from the horror show we’re about to show you. This report exposes what Facebook allows online while banning memes about Coronavirus or the Race-Wars, leaving behind a serious pandemic that proliferates in private chat groups, public groups and pages, and even personal profiles.

This document was banned from Facebook, while most of the original indecent photographs remain there. Please, do not shoot the messenger: these are the people trying to end the exploitation of children online, and there is no other way to show the core of an issue than to show it the way it really is. Some people may find it unethical, but what is really unethical here? Exposing the monsters, or the fact that this content is not only allowed but also hard to report?

Censorship of anti-pedophile groups and hunters

Check out his article from Direct to the People back in 2018, when Facebook really ramped up their protection of child predators and the #LGBTP community.

A few years back I was getting weary of the intellectual dishonesty of liberals commenting on my page. So I began to require liberals to agree that black men committed more crime than non-black men. And admit that Muslims commit more terrorist acts than non-Muslims. It was an intellectual honesty test under this picture:

Few liberals passed the test.

But I did have one liberal person comment and definitively say that Muslims do not commit more terror attacks. He was very matter-of-fact about it, different from the other liberals using emotional language. When I refuted his points with facts, I was banned almost immediately. I believe this person was a worker at Facebook, there was no way he could have submitted a report and had Facebook review it so quickly. I don’t remember his name and when I returned after my ban, the original post about rules had been removed.

Was that a Facebook employee protecting criminality and terrorism?

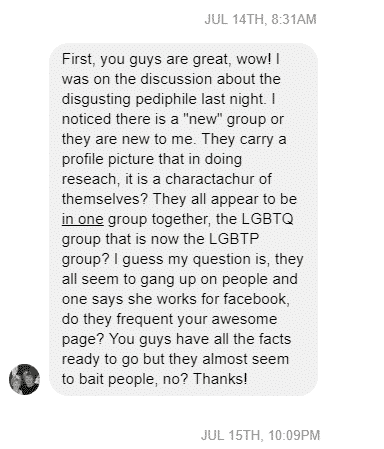

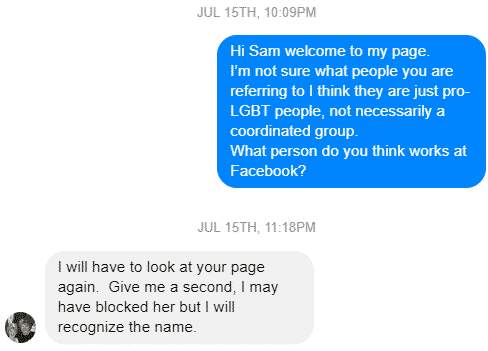

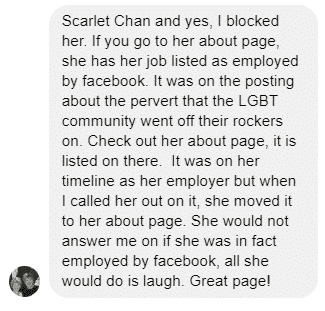

There was another Facebook employee who allegedly attacked my page not too long ago. Someone private messaged me about it:

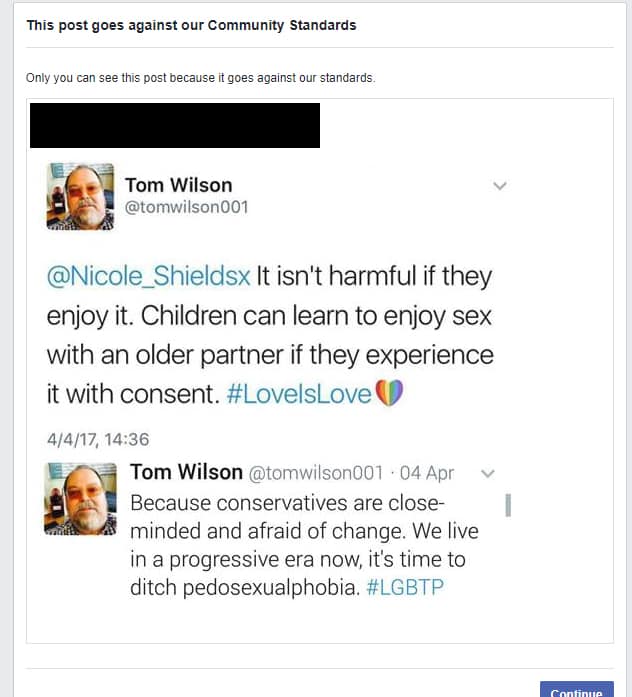

I never saw Scarlet Chan’s comment, and I don’t know whether someone by that name works for Facebook. There were hundreds of comments, and I had not read them all. But I do find it suspicious, as the meme she was commenting on was deleted shortly thereafter. This was the meme:

2.4 million people had seen this at the time it was deleted.

Why was it deleted? It calls attention to pedophiles and their attempts to normalize pedophilia. From the comments section, most liberals agreed this guy was in the wrong. Great. Let’s unite against pedophilia right?

I guess not, Facebook didn’t think so. Why is Facebook protecting pedophile ideas?

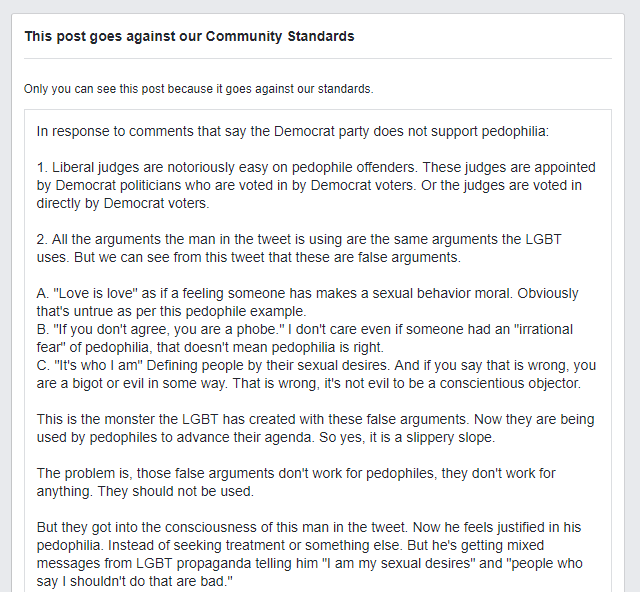

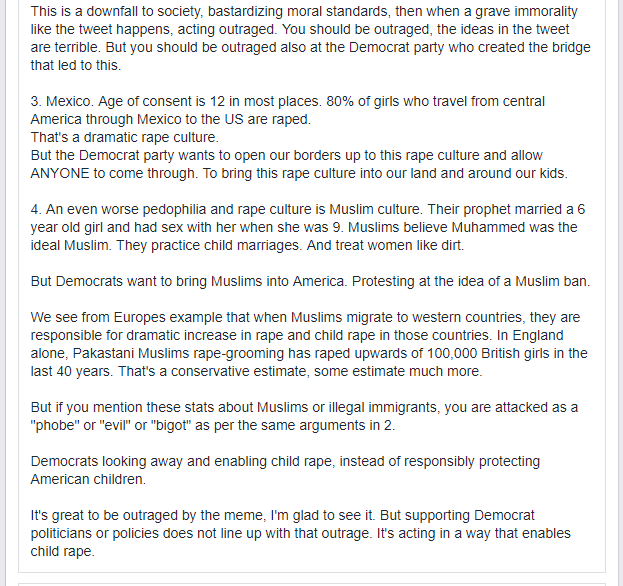

2 days before they deleted the meme, Facebook deleted a comment I made on the meme and banned me for 3 days. Here is the deleted comment:

Someone at Facebook deleted that comment. Facebook decided to defend:

- The pedophilia problem in Muslim culture.

- Pedophilia problem in Mexican culture.

- Liberal judges who are soft on pedophiles.

- The arguments the man in the meme made in support of his pedophilia.

What worse things could Facebook defend?

A few months ago, Facebook banned me for another meme that called attention to pedophilia:

So let me get this straight liberal Facebook:

Pizzagate is not real. “Nothing to see here.”

Except when I post pictures connected to Pizzagate, they are so obviously suggestive of pedophilia that they must be deleted from Facebook?

You can’t have it both ways! These are some of the more tame pictures pointing to Pizzagate by the way. There are much sicker pics floating around.

Again, Facebook covering for pedophiles.

What is the deal here?

I know what it is.

It’s the liberal mentality taken to its natural conclusions.

Liberalism says it’s mean, racist, bigoted, hateful, etc to criticize people or people groups for wrongdoing. Putting people’s feelings on a pedestal. Rather than putting goodness on a pedestal. Liberals sacrifice goodness on the altar of feelings. The results are goodness decreases, evil increases.

It’s not hateful to point out wrongdoing. Parents point out the wrongdoing of their kids all the time. Does that mean parents hate their kids? Of course not.

This is the fundamental error of liberalism and it appeals to the disobedient brat inside of each of us. “You’re not wrong for disobeying your parents, your parents are just mean.”

YouTube Exposed

Back in 2019, YouTube was in crisis mode yet again.

On Feb. 21, the online video giant announced it had banned more than 400 channels and disabled comments on tens of millions of videos following a growing YouTube controversy concerning child exploitation. Meanwhile, many major brands, like Disney, AT&T, Nestle, and Fortnite, were jumping ship and halting all YouTube advertising in its wake.

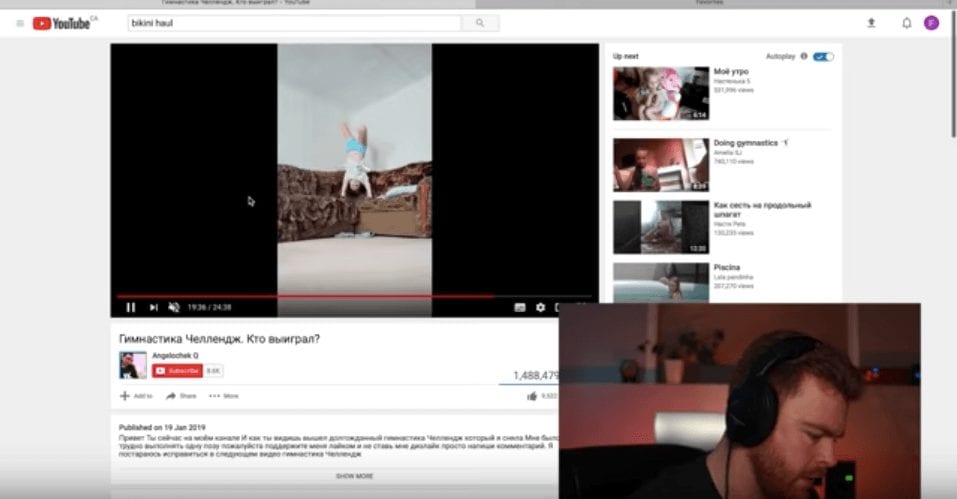

Over the weekend, a YouTube video highlighting child predators’ rampant use of the platform had gone viral. YouTuber Matt Watson posted the video walking users through how a simple YouTube search can easily uncover “soft” pedophilia rings on the video service.

Child exploitation is clearly an internet-wide issue; however, Watson’s video explored how YouTube’s recommendation algorithm creates a problem unique to the site. As shown in Watson’s viral upload, a search for popular YouTube video niches, such as “bikini haul,” leads the platform to recommend similar content to viewers. If a user clicks on a single recommended video featuring a child, the viewer can get sucked into a “wormhole,” as Watson calls it, where YouTube’s recommendation algorithm proceeds to push content that features only children.

These recommended videos are then inundated with comments from child predators. Often, these comments link directly to timecodes within the video. The linked timecodes forward a user to a point in the video where the minor may, innocently, be found in a compromising position. On these video pages, commenters also share their contact details so that they can trade child pornography with other users off YouTube’s platform.

On top of all of this, Watson pointed out that many of these videos were monetized. Basically, big brands’ advertisements were appearing right next to these comments.

The video went viral on Reddit, and viewers quickly started spreading the hashtag #YouTubeWakeUp across social media, urging the company to take action.

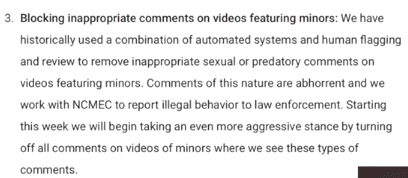

UPDATE: @YouTube @YTCreators left a comment and provided an update on what they’ve done to combat horrible people on the site in the last 48 hours.

TLDR: Disabled comments on tens of millions of videos. Terminated over 400 channels. Reported illegal comments to law enforcement. (Philip DeFranco, @PhillyD, on Twitter, February 21, 2019)

While many popular creators covered the issue, PhillyD received a comment from the official YouTube channel after he posted his video.

“We appreciate you raising awareness of this with your fans, and that you realize all of us at YouTube are working incredibly hard to root out horrible behavior on our platform,” said YouTube in their comment.

“In the last 48 hours, beyond our normal protections we’ve disabled comments on tens of millions of videos,” the statement continued. “We’ve also terminated over 400 channels for the comments they left on videos, and reported illegal comments to law enforcement.”

This wasn’t the first or last time YouTube had been accused of having child exploitation issues. In 2017, a slew of problematic videos marketed specifically to children were found on the platform, often directly on the YouTube Kids app. These videos presented popular children’s characters in adult themes, sometimes in sexually explicit fashion.

In response, YouTube overhauled its kids app and put new moderation policies in place.

The Not-So-Secret pedophile ring your child is most likely a part of

We know that it doesn’t take much in this new technological age to find pornography. Whether you’re looking for it or not, the mere use of social media makes you a target, and it’s a growing concern among parents of teens as the internet continues to grow more dangerous than ever before.

Forever Mom has talked about the secret codes and hashtags the pornography industry is using on popular apps like Instagram and Snapchat. But a new study is warning parents of the dangers of sexual exploitation that is being monetized on YouTube.

YouTube vlogger MattsWhatItIs took to the video streaming platform on Sunday with a disturbing warning to parents about just how easily your child could become a victim of sexual exploitation by using the site.

“Over the past 48 hours I have discovered a wormhole into a soft-core pedophilia ring on YouTube,” Matt says at the beginning of the video, which has been viewed over 1.3 million times since it was posted on Sunday. “YouTube’s recommended algorithm is facilitating pedophiles’ ability to connect with each other, trade contact info, and link to actual [child pornography] in the comments.”

YouTube’s algorithm works by tracking your searches and then suggesting content you may be interested in watching. The recommendations keep you on the website longer, which lets YouTube make more money from the ads you see and keep tracking your viewing trends to provide the best user experience.

As Matt explains, YouTube’s algorithm has a loophole that pedophiles use to prey on children, swap information, and launch into child pornography and exploitation. And they’re using our kids’ YouTube videos to do it.

What’s worse, because of this glitch in the algorithm, YouTube is actually making money off the videos being used to exploit children. Advertisements for brands like McDonald’s, Disney, Lysol, Reese’s, and more appear to the viewer, and every time those ads are viewed, it’s money in YouTube’s pockets.

“What this is, is child exploitation,” Matt says, before demonstrating just how easy it is for your child to become a victim.

Using a brand-new YouTube account, Matt dives in to show viewers just how easy it is to enter the “wormhole” that is YouTube’s pedophile ring.

Using an innocuous search, Matt is directed to one video, and then another. In just two clicks, the suggested videos have shifted.

“Two clicks,” Matt explains. “I am now in the wormhole…look at this sidebar. There is nothing but little girls.”

Time Stamping

As Matt scrolls through the comments, he begins to explain just how these pedophiles operate. They use a technique called time stamping to point one another to the moments in a video where little girls are in “compromising positions” or “sexually implicit” positions.

Parents, understand this: these are NOT racy videos that girls made on a webcam while their mom wasn’t home. They’re purely innocent: girls doing a cartwheel or sitting on the floor with their legs crossed. One wrong move, and a girl is time stamped, making it easy for hundreds, if not thousands, of other pedophiles to jump to that exact point in the video.

It’s time stamping that takes a child from doing a handstand on her couch to becoming a victim of child sexual exploitation.

“One of the prominent things that I want to point out is the fact that once you enter into this wormhole, there is no other content available. Once you enter into this wormhole for whatever reason, YouTube’s algorithm is glitching out to the point that nothing but these videos exist. This facilitates the pedophiles’ ability to find this content.”

Of course, some of these videos carry advertising, which, in the simplest terms, means that YouTube is facilitating pedophilia and making money off it.

In 2017, the video streaming giant released a statement saying it was “toughening [its] approach” to protecting children and families on the platform. One of the ways it claimed to be doing this was by disabling comments on videos of minors that appear to have inappropriate sexual or predatory comments.

“This is significant,” Matt says, “Because that means that we know that YouTube has an algorithm in place that detects some kind of unusual, predatory behavior on these kinds of videos. And yet, all that is happening is the comments are disabled?”

Matt points back to the wormhole, saying that disabling comments doesn’t do anything when the wormhole keeps users stuck in a never-ending cycle of little girls.

“How can no one see, once you are in this loophole, there is nothing but more videos of little girls? How has YouTube not seen this?” He continues, “We have nothing but videos that pedophiles are uploading, collecting, and doing whatever with.”

To YouTube’s credit, Matt says that when he reported comments and inappropriate behavior, the platform did remove them. However, the user accounts associated with links to child pornography, time stamping, and inappropriate comments remained active.

Challenges

Matt says that these pedophiles use the comments to connect with the girls in the videos and others like them. They then prompt the girls to do “challenges.” Again, these are activities that seem totally innocuous to a young girl, but the predatory men giving this unsolicited direction are getting exactly what they want.

Examples from the video include popsicle challenges, yoga challenges, and Twister challenges.

These challenges are beyond disturbing, and they’re being produced and reproduced in droves online.

The thing is, searching for something like a yoga challenge is totally normal. These predators aren’t searching for child pornography, and they’re not using inappropriate search terms. Yet YouTube is chock-full of it.

“Exploitation is happening right underneath people’s noses right here on YouTube, and it’s disgusting, and no one is doing anything about it,” Matt says.

Parents, we know that the internet is not a safe place for kids. We know that children should not be posting videos or actively chatting on the interwebs. We know these things, but most of our children are on YouTube. They watch the videos, they follow the influencers, and many want their own shot on the other side of the screen.

Stay vigilant, mamas. Educate yourself and stay in the know about what your kids and their friends are up to online.

Why Twitter, Facebook, and Google don’t want to be media companies

If Twitter, Facebook, and Google were media companies, they would be held 100% accountable for the content that appears on their platforms. Under Section 230 of the Communications Decency Act, providers of “interactive computer services” like these tech giants are largely immune from liability over content posted by others.

Being media companies would invite regulation and demand more investment in sophisticated algorithms and human moderators, never mind the difficult decision-making. It would also make these platforms much more susceptible to litigation.

Every single one of those items would require significant expenditures that would lower margins. That would make the companies less valuable and presumably push their price-to-earnings ratios (P/Es) down into the range of the old media companies’.

So you can see why executives at social media companies go to great lengths to describe themselves as open, agnostic, even magical platforms with little responsibility for their content except in very obvious cases such as calls for murder and the sale of opioids.

But now Facebook, Alphabet, and Twitter are discovering it’s much more complicated than that. You see it in headlines every day: Pinterest recently stopped allowing vaccine searches because they were enabling the anti-vax crowd.

And speaking of Google, it recently had to work to rid YouTube’s comment sections of content linked to pedophiles. And there’s Alex Jones and myriad others who’ve been banned from Twitter. I find Wikipedia’s list of Twitter suspensions fascinating.
